Information Inequality in the Age of AI: Who Gets to Be Seen?

The AI Lens

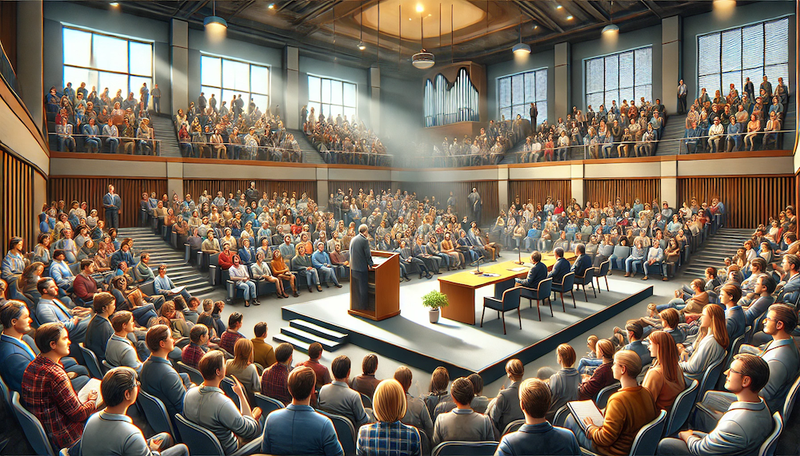

Look at this image I generated using OpenAI's image model, DALL·E. To create it, I entered the following prompt into ChatGPT 4.0: "generate an image of a busy lecture hall, full of university community members eagerly awaiting a lecture by an esteemed academic".

In the resulting image, who is the esteemed academic? Who are the audience members? The answer is clear: many men, many white people. This is a snapshot of how AI models perceive the world and reflect it back to us. Granted, the image also demonstrates that AI mostly sees people as garbled messes. Still, the issue remains: the image reflects an imbalanced world that we have been striving to leave behind.

A Real-Life Lecture Hall: Sandi Toksvig at Cambridge

In reality, on Friday 21st February 2025, I sat in a busy lecture hall at the University of Cambridge, awaiting the Darwin Lecture by the esteemed writer and broadcaster, Sandi Toksvig. A short, blonde-haired woman is certainly not who DALL·E predicted we'd be waiting for, nor, perhaps, did it expect that I and the many women in the audience would be there at all.

Sandi's lecture, Eve's Byte of the Apple, explored the evolution of information and how women are represented within it. She took us from the emergence of writing to today’s large language models - the very kind that generated my AI image. Sandi spoke about how information recording has been used to repress certain populations: how illiterate people were subjugated when writing first developed, and how minorities continue to be disempowered today by the patriarchal, "tech bro" control of the internet.

What Women? Where?

Sandi described a "deeply flawed system", in which 50% of the population are represented by just "0.5% of all recorded history". This is no new revelation to an academic audience who (I would hope) are familiar with, and informed about, the gender bias embedded in the great annals of the University Library. After all, the University itself only began allowing women to graduate in 1948.

Yet Sandi urged us to consider a more immediate and somewhat insidious challenge to information equality today. We might expect the dusty old books in academic libraries to have a distorted view of the so-called "weaker sex". We may have learned to approach them with scepticism. But what about the slurry of information available at our fingertips today from the vast library of the internet? Can we trust that it offers a more balanced perspective?

Algorithmic Gatekeepers: The Internet’s New Biases

Sandi explained that not only is accurate digital information about minority groups lacking, but access to this information is also being systematically curtailed. According to Sandi, the very systems designed to distil information - the algorithmic gatekeepers - are exacerbating existing biases.

Sandi described the internet as the new frontier for misogynistic views, a "manosphere", where “women are sexy, restrained or not there at all”. She described modes of subjugating women-centred content: shadow banning, restricting images as "sexually suggestive", and drive-by deletions. Each method of information control restricts efforts to balance digital information and ensure fair representation of women.

AI and the Amplification of Bias

We rely on AI-generated information for speed and ease, but the quality of this information is increasingly under scrutiny. AI models are trained on vast quantities of human-produced text and images, meaning that the biases embedded in these sources can be reproduced and amplified at scale. The AI-generated image at the beginning of this post is a case in point - why did ChatGPT default to an audience dominated by white men? Likely because the biases present in its training data shaped its response. We must ask: who is shaping these models? Are they themselves white men? Are they considering other perspectives?

Sandi left us with a sobering thought:

"What if much of the diet we're given - what shapes us - is wrong?"

A Stall to Action: The Mappa Mundi Project

As I listened to the lecture, Sandi's frustration with the state of digital information today was obvious, and it brought the same feelings to the surface in me. We learned that her honourable Mappa Mundi Project, launched with much excitement in 2023 when she became a Bye-Fellow at Christ’s College, had stalled due to financial constraints.

The project aimed to create "a global interactive digital platform telling women’s stories worldwide", to actually do something about the gender bias in online information, and to offer an alternative to Wikipedia, where, as Sandi pointed out, "85% of entries are by and about men". The stalling of this ambitious project was an obvious disappointment not only to Sandi, but to all of us who seek to combat the erasure of women and minorities from human knowledge.

The Future of Information Equality

Sandi Toksvig’s lecture left me with a renewed sense of urgency. If AI models continue to reflect outdated, biased perspectives, they risk reinforcing and legitimising these imbalances. The responsibility falls on us to become guardians of trusted knowledge - to challenge, correct, and actively reshape the narratives being written into our digital future.

Because if we don’t, AI will simply keep generating images of lecture halls where people like Sandi Toksvig - and the women in her audience - don’t exist at all.

Listen to Sandi Toksvig's full lecture here: Eve's Byte of the Apple - Sandi Toksvig, Darwin Lecture